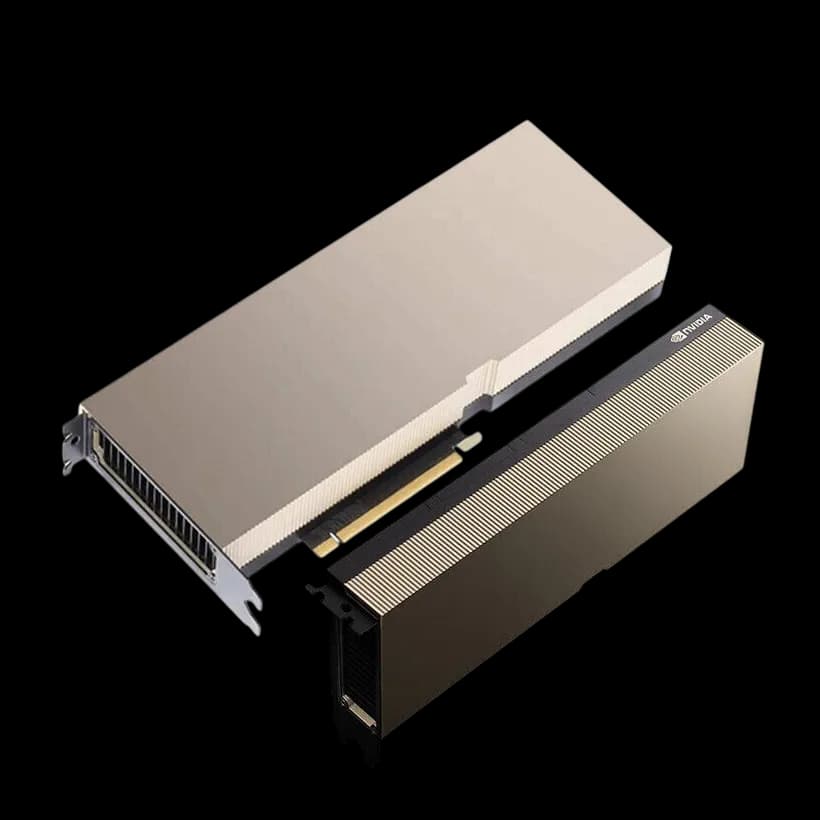

NVIDIA HGX A100 4-GPU Server Board | SXM4, 320GB Total

The NVIDIA HGX A100 4-GPU Server Board integrates four A100 SXM4 GPUs with 320GB of HBM2e memory in total (80GB per GPU) and is designed for enterprise data centers running large AI workloads. The GPUs are linked by NVLink for multi-GPU scaling, which suits the board to AI training, inference, and HPC applications that need high compute throughput and reliability.

Powered by NVIDIA — Driving the Future of AI & Quantum

NVIDIA’s advanced GPUs provide unmatched performance for deep learning, scientific computing, and HPC. With cutting-edge NVLink scalability and CUDA acceleration, these chips not only enable state-of-the-art AI solutions but also pave the way for breakthroughs in quantum computing, making them the backbone of the next era of computation.